Why DNN Loss Landscapes aren't Convex

Introduction

I was speaking to a friend recently about model complexity, when I remarked that model loss landscapes weren’t convex. The loss landscape has “tracks” that you can smoothly change your model’s parameters along without changing its loss:

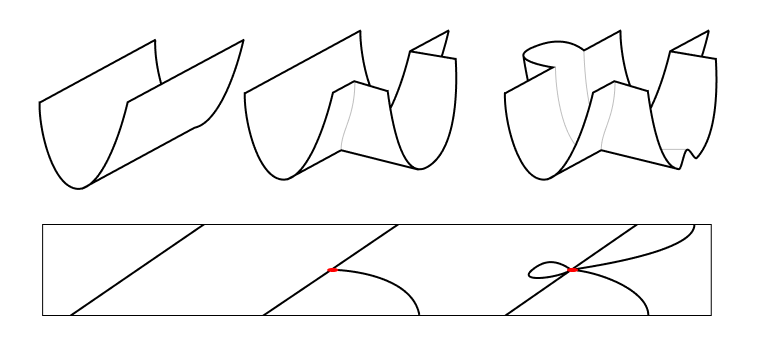

There’s two reasons why:

- Standard neural network architectures allows multiple sets of weights to implement the same “function.”Roughly speaking, imagine increasing a weight in a layer and then reducing the corresponding weights in the next layer.

- Model training creates additional directions of travel where different implemented functions perform equally well on the dataset and have the same loss.

Example of case 1:

Let’s say we have two layers, denoted by (W1, b1) and (W2, b2).

Shapes:

- x (dim_in)

- w1 (dim_in, dim_l1)

- b1 (dim_l1)

- w2 (dim_l1, dim_l2)

- b2 (dim_l2)

- a (dim_l1)

- y (dim_l2)

a=relu(xW1+b1)

y=aW2+b2

Writing out the sums:

aj=relu(b1j+∑ixiw1ij) yk=b2k+∑jajw2jkFor a given c, Let’s add ν to b1c.

ac=relu(b1c+(∑ixiw1ic)+ν) yk=b2k+(∑j≠cajw2jk)+acw2ckIf relu(b1j+∑ixiw1ij)=0 and relu(b1c+(∑ixiw1ic)+ν)=0:

- There’s no impact on the function by changing ν, since the relu kills the change.

If ac increases by ν:

- We can maintain yk by reducing b2k by ν or modifying w2ck.

Things get a little more tricky when part of the ν increase is cut off by the relu boundary, but you can informally always reduce b2k by the same amount as ac increases to maintain the same function y(x).