Gradient Estimation: Training What You Can't Backprop Through

Introduction

Neural networks work well through the magic of backpropagation, but there are times when we can’t backpropagate through our layers. Let’s imagine we have a simple problem: We have a Mixture of Experts (MoE) model with a probabilistic routing layer.

To be specific, our routing layer selects one expert to route its computation through.
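To make the setup concrete, here is a minimal sketch of such a routing layer in plain Python. Everything named here (`softmax`, `route`, the three toy experts, and the fixed `router_logits`) is a hypothetical stand-in; in a real model the logits would be computed from the input by a learned layer.

```python
import math
import random

def softmax(logits):
    # Subtract the max for numerical stability before exponentiating.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def route(x, router_logits, experts, rng):
    """Sample exactly one expert and run the input through it."""
    probs = softmax(router_logits)
    # Discrete sampling step: draws a single expert index with these weights.
    idx = rng.choices(range(len(experts)), weights=probs, k=1)[0]
    return idx, experts[idx](x)

# Three toy "experts" (assumed for illustration only).
experts = [lambda x: x + 1.0, lambda x: 2.0 * x, lambda x: -x]

rng = random.Random(0)
idx, y = route(3.0, [0.5, 0.1, -0.3], experts, rng)
print(idx, y)
```

The sampled index is a discrete choice, so for a fixed random draw the output does not change smoothly as the router logits change. That is the step ordinary backpropagation cannot pass a gradient through.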

graph TD
    A[Input] --> B{Router}
    B -->|Route 1| C[Expert 1]
    B -->|Route 2| D[Expert 2]
    B -->|Route 3| E[Expert 3]
    C --> F[Output]
    D --> F
    E --> F

Why DNN Loss Landscapes aren't Convex

Introduction

I was speaking to a friend recently about model complexity, when I remarked that model loss landscapes weren’t convex. The loss landscape has “tracks” that you can smoothly change your model’s parameters along without changing its loss:

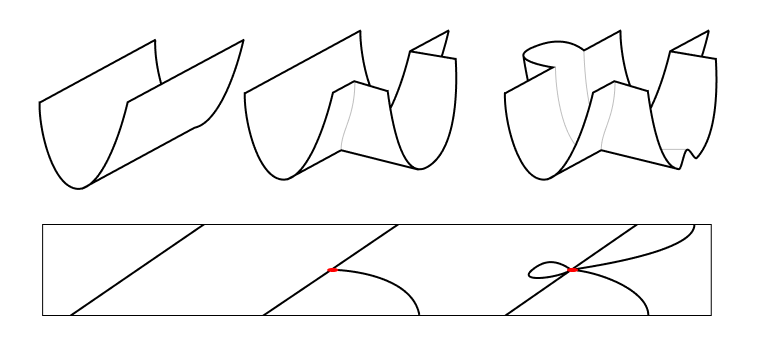

There are two reasons why:

- Standard neural network architectures allow multiple sets of weights to implement the same “function.” Roughly speaking, imagine increasing a weight in one layer and then reducing the corresponding weights in the next layer.

- Model training creates additional directions of travel where different implemented functions perform equally well on the dataset and have the same loss.
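The first reason can be checked numerically. The sketch below assumes a tiny two-layer ReLU network with scalar input and made-up weights (`w1`, `w2`, and `alpha` are all illustrative): scaling the first layer by a positive `alpha` and dividing the second layer by the same `alpha` leaves the output unchanged, because `relu(alpha * z) == alpha * relu(z)` whenever `alpha > 0`.

```python
def relu(z):
    return max(0.0, z)

def two_layer(x, w1, w2):
    # Hidden layer: one ReLU unit per first-layer weight; output: weighted sum.
    hidden = [relu(w * x) for w in w1]
    return sum(v * h for v, h in zip(w2, hidden))

# Hypothetical weights chosen for illustration.
w1 = [0.5, -1.0, 2.0]
w2 = [1.5, 0.3, -0.7]

# Move along the "track": scale layer 1 up and layer 2 down by the same factor.
alpha = 4.0
w1_scaled = [alpha * w for w in w1]
w2_scaled = [v / alpha for v in w2]

inputs = [-2.0, -0.5, 0.0, 1.0, 3.0]
max_gap = max(
    abs(two_layer(x, w1, w2) - two_layer(x, w1_scaled, w2_scaled))
    for x in inputs
)
print(max_gap)
```

Every positive `alpha` gives a different point in weight space that computes the same function, so the loss is constant along that whole curve.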

Introduction to Inverse RL: BC and DAgger.

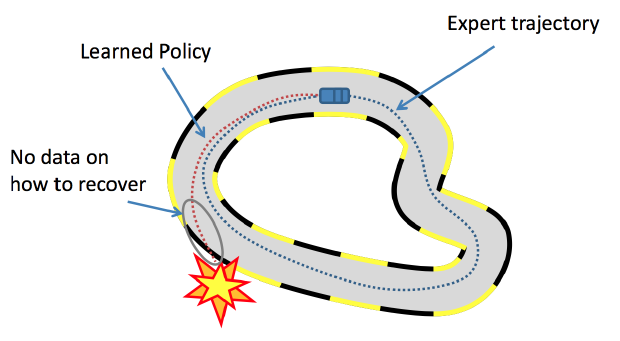

Behavioral Cloning

We’re given a set of expert demonstrations $\xi \in \Xi$ and want to determine a policy $\pi$ that imitates the expert, $\pi^*$. In behavioral cloning, we accomplish this through simple supervised learning, where the difference between the learned policy and the expert demonstrations is minimized with respect to some metric.

Concretely, the goal is to solve the optimization problem:

\[\hat{\pi}^* = \underset{\pi}{\text{argmin}} \sum_{\xi \in \Xi} \sum_{x \in \xi} L(\pi(x), \pi^*(x))\]

where $L$ is the cost function, $\pi^*(x)$ is the expert’s action at state $x$, and $\hat{\pi}^*$ is the approximated policy.
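As a rough illustration of this objective, the sketch below evaluates the double sum over demonstrations and states, then picks the best policy from a small candidate family by grid search. The expert, the demonstrations, the squared-error cost, and the linear policy family $\pi_\theta(x) = \theta x$ are all assumptions made for the example; in practice the minimization is usually done with gradient-based supervised learning.

```python
def expert(x):
    # Hypothetical expert policy pi*(x), assumed for illustration.
    return 2.0 * x

# Each demonstration xi is a list of visited states x.
demos = [[0.0, 1.0, 2.0], [-1.0, 0.5]]

def squared(a, b):
    # One possible choice of the cost function L.
    return (a - b) ** 2

def bc_loss(policy, demos, expert, loss):
    # Sum over demonstrations xi, then over states x in each xi.
    return sum(loss(policy(x), expert(x)) for xi in demos for x in xi)

# Candidate policies pi_theta(x) = theta * x, theta on a grid from 0.0 to 4.0.
thetas = [i / 10 for i in range(0, 41)]
best_theta = min(
    thetas,
    key=lambda t: bc_loss(lambda x: t * x, demos, expert, squared),
)
print(best_theta)
```

Because the toy expert happens to lie inside the candidate family, the minimizer recovers it exactly, with zero loss on the demonstrated states.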

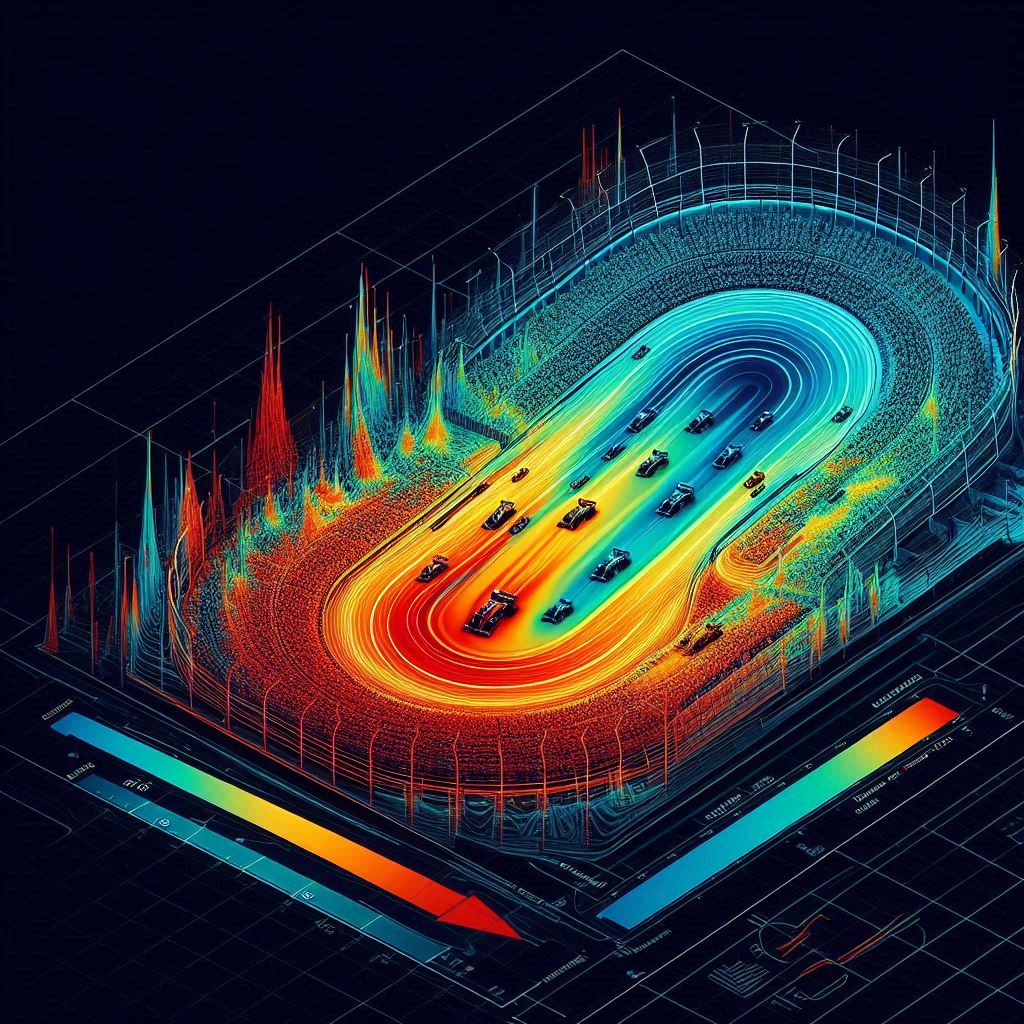

Robust Inverse RL, Explained

Inverse RL has the problem of compounding error. How do we fix this?

RAMBO-RL is an offline RL algorithm that is based on three primary ideas:

- There’s a small random shift between the experience samples we have and the underlying distribution, so we’d like to train our offline RL models to have good worst-case performance instead of good average-case performance.

- Adding an adversary makes the model’s worst-case performance robust.

- We can embed this adversary into the state transition model.
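The difference between average-case and worst-case objectives in the first idea can be seen in a toy example. This is not RAMBO-RL itself: the two actions, the three candidate transition models, and the return numbers are all invented for illustration. Picking the worst model for each action plays the role of the adversary.

```python
# returns[action][i] is the return of that action under plausible model i.
# All numbers are made up for illustration.
returns = {
    "aggressive": [10.0, 9.0, -8.0],
    "cautious": [4.0, 3.5, 3.0],
}

def average_case_choice(returns):
    # Pick the action with the best mean return across plausible models.
    return max(returns, key=lambda a: sum(returns[a]) / len(returns[a]))

def worst_case_choice(returns):
    # An adversary picks the worst model for each action; we pick the
    # action whose worst outcome is best (a max-min choice).
    return max(returns, key=lambda a: min(returns[a]))

avg_pick = average_case_choice(returns)
robust_pick = worst_case_choice(returns)
print(avg_pick, robust_pick)
```

The average-case objective prefers the aggressive action even though one plausible model makes it fail badly, while the max-min objective prefers the action that holds up under every model.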

Got more time? Read on.
